Today, software is everywhere: in our phones, vehicles, televisions, and household appliances. It is at the heart of innovation and allows companies to be more competitive and to provide more value to their customers. But it has not always been like this.

There have been times when software was a source of trouble and even its future as a viable industry was in question. Join me in this brief and very personal review of the history of software development to understand where we came from, where we are, and what the future may hold.

The First Years of Software (1960-1979)

In the beginning there was hardware. Software was inextricably linked to it, and programming was considered a minor activity. There were no development standards or good practices; instead, each company or research center followed its own internal procedures, normally based on “trial and error” models. Programming was closer to craftsmanship than to engineering.

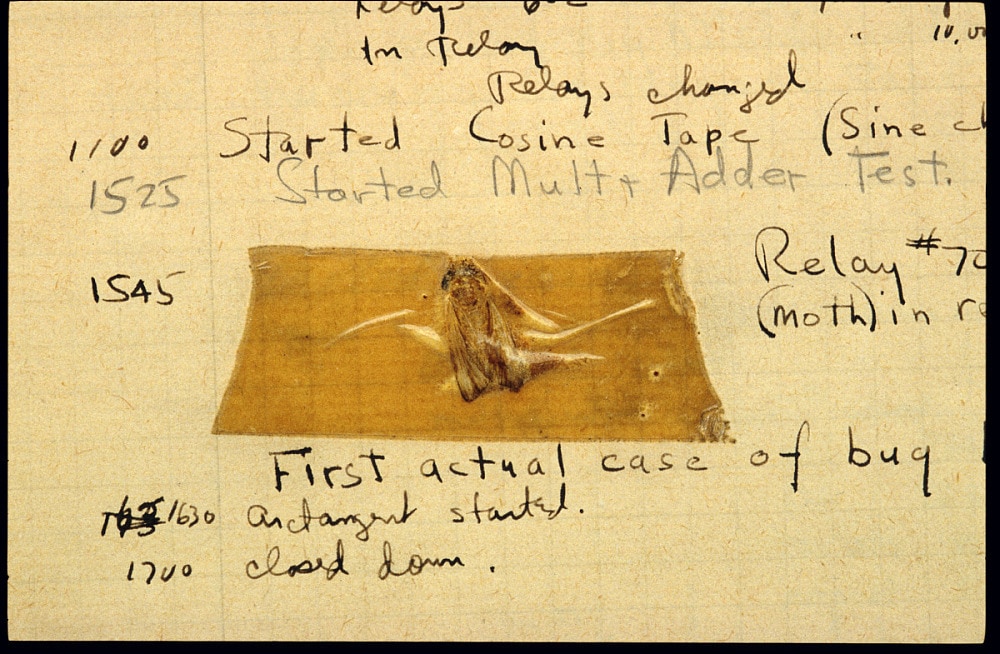

Even bugs were not always software flaws: in one famous case, the bug was an actual living creature found inside a computer, where it disrupted the machine's electronics. Here is the original logbook page with the actual moth, an incident that helped popularize the term.

“First actual case of bug being found”, Harvard, 1947.

Due to a lack of consistency in the development lifecycle, a lot of programs were routinely delivered late and with defects, which raised serious doubts about the ability of software development to become an industry capable of tackling complex problems. This period is known as the “software crisis”.

Although I didn’t live through this period personally, I would like to share an anecdote that I find very illustrative of how things have changed since then. A few years ago, an older co-worker told me that, at the beginning of his career, computer scientists wore white coats. Every morning, all the employees of the company where he worked waited for him to arrive, open the door of the data center, and hand out reports listing the tasks that each employee had to do that day.

At that time, engineers held a monopoly over computers and the information they generated, and no one questioned their work. “These damned PCs have caused us a lot of trouble,” my co-worker used to complain. To me, who grew up surrounded by personal computers, this statement was very surprising, but I understood that losing his share of power must have been very traumatic for him.

The Industrialization Process of Computers (1980-2000)

Starting in the 1980s, personal computers democratized access to computing power and definitively decoupled software from hardware. For those interested in learning more about this period, I recommend the documentary “Silicon Cowboys”, which describes how a group of former Texas Instruments managers founded Compaq to create one of the first personal computers fully compatible with the IBM PC, and how they used reverse engineering to replicate IBM’s BIOS, its only proprietary component.

To meet the growing demand for software while avoiding the problems identified in the previous period, software development moves towards industrialization in search of greater reliability and efficiency. It adopts practices inspired by traditional engineering, and the concept of software engineering is consolidated. These are the golden years of requirements documents, estimates, detailed waterfall plans, and manual validation processes.

As a result, the spotlight in software development shifts from developers to managers and processes. New business models such as “software factories” arise, which liken software development to a production line where requirements are fed in at one end and software and its associated deliverables magically come out at the other. Software development thus comes to be seen as a low value-added activity, which in many cases is outsourced offshore.

Industrialization also affects the dress code, and white coats are abandoned in favor of suits and ties.

The Agile Manifesto and the Restoration of the Original Spirit (2001-2020)

In the late 1990s, software development is a thriving industry, but it is not free from problems.

On the one hand, the companies that have adopted outsourcing most intensively suffer a loss of in-house technical knowledge, which increases their dependence on suppliers and limits their ability to use technology as a source of competitive advantage.

Additionally, programming as a profession is highly devalued and sees a large influx of people from other disciplines, brought in to cope with the high levels of demand.

Finally, there is a great disconnect between the so-called business areas, which need technological solutions to operate and develop, and the technical areas, responsible for providing these solutions.

In response to this situation, in February 2001, a group of experts published the Agile Manifesto, a statement of four values backed by 12 principles that tries to put programming back at the center of the software development process. They assert the distinctive nature of software development within engineering, which is expressed through the following preferences:

- Individuals and interactions over processes and tools

- Working software over comprehensive documentation

- Customer collaboration over contract negotiation

- Responding to change over following a plan

In short, their objective is to recover the creative nature of software development, encouraging the interaction of programmers with all stakeholders and recognizing the need to accept change as a natural part of the process.

Building on these principles, frameworks such as Scrum and eXtreme Programming (XP), which originated in the 1990s and whose creators were among the manifesto's signatories, gain widespread adoption and are among the most frequently used today for software development worldwide. The success of these ways of working has led to new organizational models such as SAFe (Scaled Agile Framework), based on the same principles.

DevOps also emerges in this period (the term dates from 2009) as a way to integrate the work of development and operations teams, promoting the adoption of agile practices to shorten development cycles (lead time). DevOps works on three levels:

- Encouraging reception of feedback through techniques such as monitoring metrics, A/B testing, etc.

- Reducing the time needed to obtain feedback, relying on automation processes and continuous integration.

- Adopting a business culture that favors continuous improvement.

Finally, cloud computing resources become easily available through public clouds provided mainly by AWS, Google and Microsoft (which launched their services in 2006, 2008 and 2010 respectively). As a result, hardware is virtualized and the shift to a new era of software centricity is completed.

With these three breakthroughs (Agile methodologies, DevOps practices and cloud computing) as driving forces, we have entered a golden age of software development, reaching levels of efficiency and adaptability that were unthinkable just 20 years ago. As a reference, the “State of DevOps” report, published annually by Google, states in its 2022 edition that the most advanced companies are today capable of deploying software several times a day (some, like Amazon and Netflix, up to hundreds of times a day) and have a lead time of between one day and one week.
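To make these two metrics more concrete, here is a minimal Python sketch of how deployment frequency and lead time could be computed from a team's own records. The data, the function names, and the choice of the median are all assumptions made for illustration; the report's exact definitions and measurement methods may differ.

```python
from datetime import datetime
from statistics import median

# Hypothetical records: (commit time, production deploy time) for each change.
changes = [
    (datetime(2023, 3, 6, 9, 0), datetime(2023, 3, 7, 15, 0)),
    (datetime(2023, 3, 6, 11, 0), datetime(2023, 3, 8, 11, 0)),
    (datetime(2023, 3, 7, 10, 0), datetime(2023, 3, 8, 16, 0)),
    (datetime(2023, 3, 8, 14, 0), datetime(2023, 3, 10, 9, 0)),
]


def lead_times_in_days(records):
    """Time elapsed between commit and production deploy, in days."""
    return [
        (deployed - committed).total_seconds() / 86400
        for committed, deployed in records
    ]


def deployments_per_day(records):
    """Number of deployments divided by the calendar days they span."""
    deploy_times = [deployed for _, deployed in records]
    span_days = (max(deploy_times).date() - min(deploy_times).date()).days + 1
    return len(deploy_times) / span_days


# The median is an illustrative choice; it is less sensitive to outliers than the mean.
median_lead_time = median(lead_times_in_days(changes))
frequency = deployments_per_day(changes)

print(f"Median lead time: {median_lead_time:.2f} days")
print(f"Deployment frequency: {frequency:.2f} per day")
```

With this sample data, the team deploys about once a day with a lead time of between one and two days, which falls inside the range quoted above.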

However, criticism has arisen in recent years from a growing number of experts who believe (as in this article) that agile methodologies have ended up departing from the original agile principles and that, in some cases, they foster a culture of constant supervision and pressure that ends up being toxic.

The perception of software development professions reaches its highest level during this period, with the arrival of Internet giants such as Google, Facebook and Netflix. This greater recognition is also transferred to the previously maligned “Nerd” aesthetic (glasses, T-shirts, jeans and sandals) that becomes mainstream.

The Future of Software

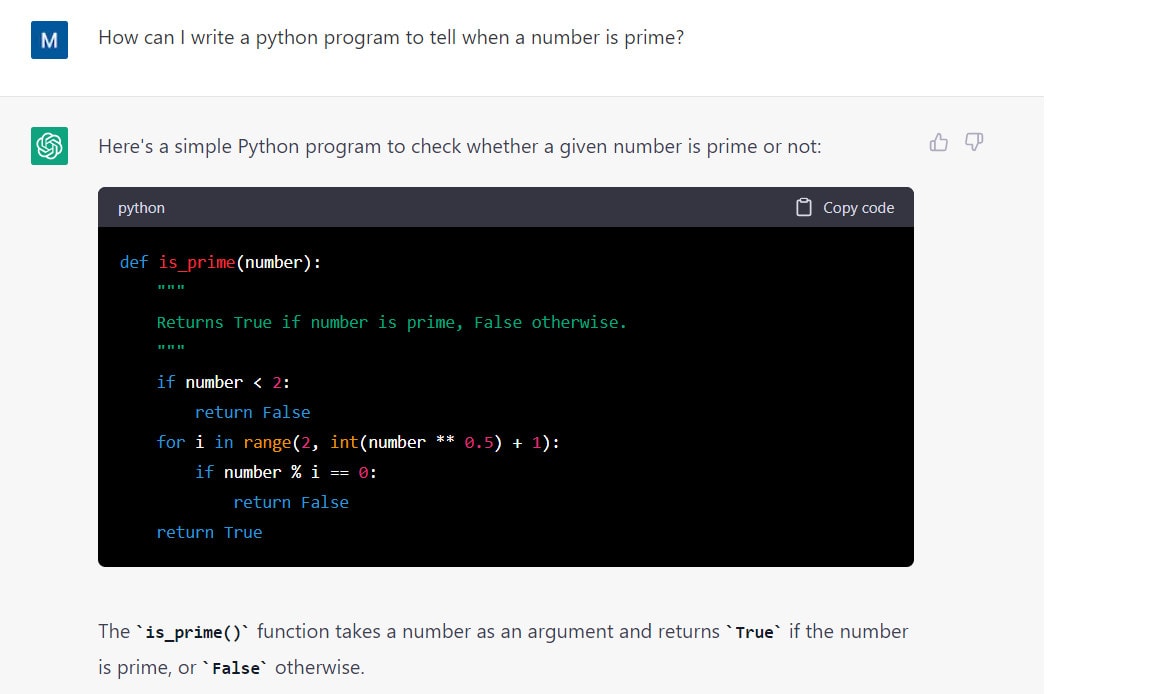

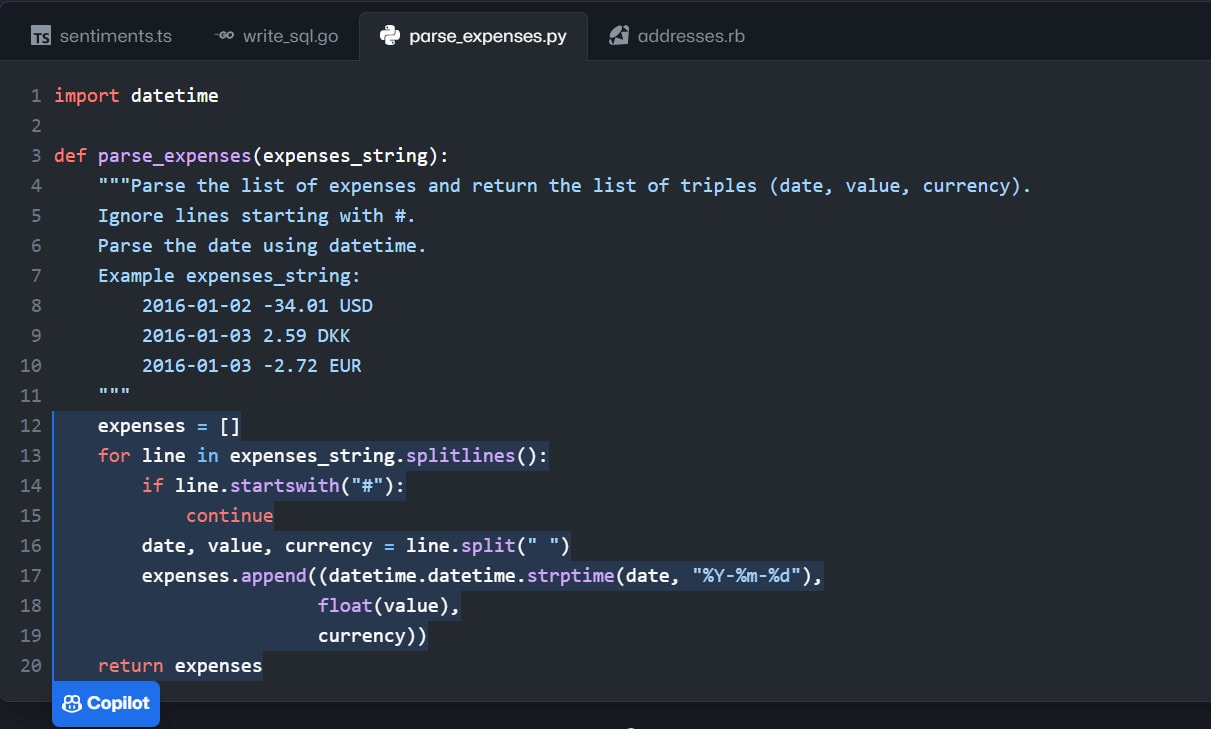

And what can we expect in the future? Innovations are created constantly in this industry, some of them with a high potential for disruption. For example, we can highlight Low Code / No Code platforms or artificial intelligence services similar to ChatGPT such as Ghostwriter chat or Copilot that can provide the source code needed to implement a specific solution upon request. Here are some basic examples using ChatGPT and Copilot:

All of these innovations have raised questions about the future of software developers, whom some believe could eventually disappear. In my opinion, we should not fall into nostalgia, like my older co-worker, or let ourselves be carried away by fear.

I am sure that the future will bring changes and that some tasks that today are executed by programmers will end up being carried out by non-technical users or by algorithms. But these changes will also bring opportunities in the form of new work models, new tools, or even new professions that will help us take the software development industry to the next level.
